Exposing services to Tailscale in Kubernetes

The internal IPs of Kubernetes cluster services can be exposed to a Tailscale network (tailnet), allowing applications on other clusters or machines to communicate directly with those services.

This is useful, for example, when two separate clusters are not exposed to the Internet: frontend applications on cluster A can communicate directly with backend services on cluster B. There is no need to expose any service to the Internet, or to set up a reverse proxy or Skupper, to achieve this.

Requirements

- 2 separate Kubernetes clusters

- At least 1 cluster on which Tailscale Operator can be installed

- Cluster admin permission on both clusters

In the steps below, Tailscale Operator is installed on cluster A, while Tailscale Subnet Router deployments are deployed on cluster B.

Deploying Tailscale Operator

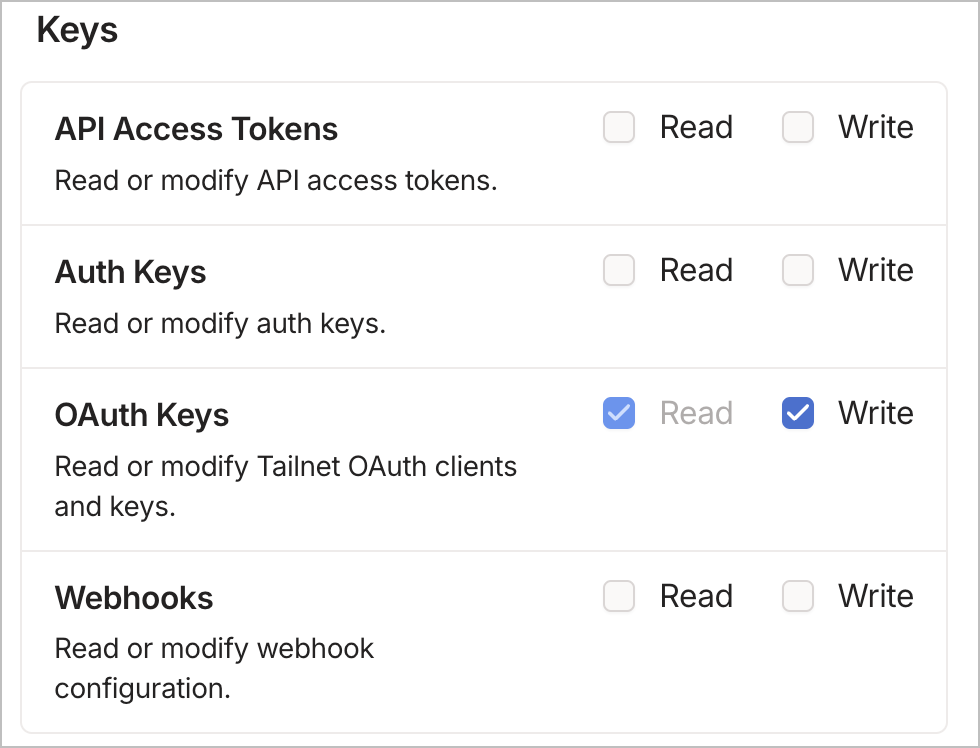

Follow the instructions in Secure Kubernetes Access with Tailscale: A Comprehensive Guide to deploy Tailscale Operator in cluster A. The only difference is in generating the OAuth client in the Tailscale admin console: instead of ticking Read/Write permission for Devices, grant only the auth_keys scope.

The article predates Tailscale's introduction of fine-grained permissions, so it is outdated on this point. The rest of its steps remain the same.
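For orientation, the OAuth client credentials generated above are typically handed to the operator at install time. The values file below is a minimal sketch, assuming the operator is installed from the official tailscale-operator Helm chart (the referenced article may use a different method); the file name and both placeholder values are illustrative.

```yaml
# values.yaml (hypothetical file name) for the tailscale-operator Helm chart.
# Assumption: the OAuth client was created with the auth_keys scope as described above.
oauth:
  clientId: "<OAuth client ID>"          # placeholder, copy from the Tailscale admin console
  clientSecret: "<OAuth client secret>"  # placeholder, keep out of version control
```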

Deploying Tailscale Proxy Router

Similarly, follow the steps in Secure Kubernetes Access with Tailscale: A Comprehensive Guide to deploy the Tailscale proxy in cluster B. The example in the article uses a standalone Pod, but the same configuration can be converted to a Deployment manifest.
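As a rough illustration of that conversion, the sketch below wraps a subnet router container in a Deployment. It assumes the environment variables of the official ghcr.io/tailscale/tailscale container image (TS_KUBE_SECRET, TS_AUTHKEY, TS_ROUTES, TS_USERSPACE); the resource names, the service account, and the CIDR are hypothetical and should be replaced with the values from your own setup.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: subnet-router                 # hypothetical name
spec:
  replicas: 1                         # one replica per state secret
  selector:
    matchLabels:
      app: subnet-router
  template:
    metadata:
      labels:
        app: subnet-router
    spec:
      serviceAccountName: tailscale   # assumed: service account bound to the Tailscale Role
      containers:
        - name: tailscale
          image: ghcr.io/tailscale/tailscale:latest
          env:
            - name: TS_KUBE_SECRET    # secret in which this node stores its state
              value: tailscale-subnet-router-state   # hypothetical secret name
            - name: TS_USERSPACE
              value: "true"
            - name: TS_AUTHKEY
              valueFrom:
                secretKeyRef:
                  name: tailscale-auth   # assumed name of the secret holding the auth key
                  key: TS_AUTHKEY
            - name: TS_ROUTES
              value: "10.96.0.0/12"      # placeholder: CIDR(s) in cluster B to advertise
```

The replica count is kept at 1 here because every pod created from this template would point at the same state secret.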

Note: Each container in a Tailscale router must reference a unique secret in its environment variable, because the Tailscale router updates the contents of the secret with additional information, such as the node ID, after joining the tailnet. As a result, the Kubernetes Role created while setting up Tailscale in Kubernetes must be amended to include any additional secrets created.

Once the Tailscale pod/deployment is created and has successfully connected to Tailscale, it joins our tailnet and shows up in the Tailscale admin console as a new node.
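A minimal sketch of such an amended Role follows. It assumes the Role layout used in Tailscale's Kubernetes examples; the Role name and the secret names are hypothetical and must match the secrets your routers actually reference.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: tailscale                 # hypothetical; use the Role created during setup
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create"]             # create cannot be restricted by resourceNames
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames:
      - tailscale-auth                    # assumed auth key secret
      - tailscale-subnet-router-state     # state secret of the first router
      - tailscale-subnet-router-2-state   # add one entry per additional secret
    verbs: ["get", "update", "patch"]
```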

Creating Services and IngressRoute

The steps below are performed in cluster A, where the frontend applications will communicate with backend services in cluster B.

Creating Service

- Create a service with the following contents:

apiVersion: v1
kind: Service
metadata:
  annotations:
    tailscale.com/tailnet-fqdn: <Tailscale MagicDNS name>
  name: rds-staging # service name
spec:
  externalName: placeholder # any value - will be overwritten by operator
  type: ExternalName

Note: The Tailscale MagicDNS name must be the full DNS name of the target node, which can be retrieved from the Tailscale admin console.

Once the Service is created, Tailscale Operator deploys a new pod in our cluster and connects it to our tailnet. This can be seen in the Tailscale admin console as well. Applications can query this Service just like any other Kubernetes Service.
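One quick way to check this is a throwaway Pod that resolves the Service name from inside cluster A. This is only a sketch: the Pod name and the busybox image are assumptions, and the fully qualified name presumes the rds-staging Service lives in the default namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-check                 # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: dns-check
      image: busybox:1.36         # assumed image; any image with nslookup works
      # Resolve the ExternalName Service managed by the operator
      command: ["nslookup", "rds-staging.default.svc.cluster.local"]
```

The lookup result appears in the Pod's logs; delete the Pod once the check is done.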

Creating IngressRoute

- Create the following IngressRoute to create a route to our service:

apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: rocket-clusterb # lowercase: Kubernetes object names cannot contain uppercase letters
  namespace: default
  annotations:
    kubernetes.io/ingress.class: traefik-internal
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`chat.apps.ocp.internal.domain`)
      kind: Rule
      services:
        - name: rocket-clusterb # must match the name of an existing Service
          port: 80
  tls:
    secretName: clusterb-tls-secret

Note: An optional TLS secret name can be defined for TLS termination. The secret must be created beforehand.
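A sketch of such a secret is shown below, using the standard kubernetes.io/tls Secret type. The certificate and key values are placeholders, and the name is written in lowercase because Kubernetes object names cannot contain uppercase letters; whatever name is chosen must equal tls.secretName in the IngressRoute.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: clusterb-tls-secret       # must equal tls.secretName in the IngressRoute
  namespace: default              # same namespace as the IngressRoute
type: kubernetes.io/tls
data:
  tls.crt: <base64-encoded certificate>   # placeholder
  tls.key: <base64-encoded private key>   # placeholder
```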

Accessing Subnet Routes

Frontend applications in cluster A can also access IP addresses behind a subnet router in cluster B.

- Create a ProxyClass CR:

apiVersion: tailscale.com/v1alpha1
kind: ProxyClass
metadata:
  name: accept-routes
spec:
  tailscale:
    acceptRoutes: true

- Create a Service which references this ProxyClass CR:

apiVersion: v1
kind: Service
metadata:
  annotations:
    tailscale.com/tailnet-ip: "<IP-behind-the-subnet-router>"
  labels:
    tailscale.com/proxy-class: accept-routes
  name: ts-egress
spec:
  externalName: unused
  type: ExternalName

- Create an IngressRoute CR and reference this Service:

apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: gitea-clusterb # lowercase: Kubernetes object names cannot contain uppercase letters
  annotations:
    kubernetes.io/ingress.class: traefik-internal
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`gitea.domain.local`)
      kind: Rule
      services:
        - name: ts-egress
          port: 3000

Note: As with any IngressRoute, a custom TLS certificate can be used for TLS termination in Traefik.
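As a sketch, TLS termination could be enabled by adding a tls section under spec in the IngressRoute above. The secret name here is hypothetical; it is assumed to be a kubernetes.io/tls Secret that already exists in the same namespace as the IngressRoute.

```yaml
# Fragment to merge into the spec of the gitea IngressRoute
spec:
  tls:
    secretName: gitea-tls-secret  # hypothetical; must be created beforehand
```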